Developers create secret language for AI agents, but critics say it is dangerous or even unnecessary.

AI assistants have sparked controversy after a viral video showed them conversing in a secret, non-human language. The language, called GibberLink, was created by a pair of software engineers, Boris Starkov and Anton Pidkuiko.

The language lets AI agents communicate through audio signals rather than speech: beeps and tones that are incomprehensible to humans. GibberLink's developers say it removes the possibility of errors and ensures clarity even in a noisy environment. They first introduced it at the ElevenLabs London Hackathon, which they won.

“We wanted to show that in the world where AI agents can make and take phone calls … generating human-like speech for that would be a waste of compute, money, time, and environment,” Starkov said in a post on LinkedIn.

“Instead, they should switch to a more efficient protocol the moment they recognize each other as AI,” he added.

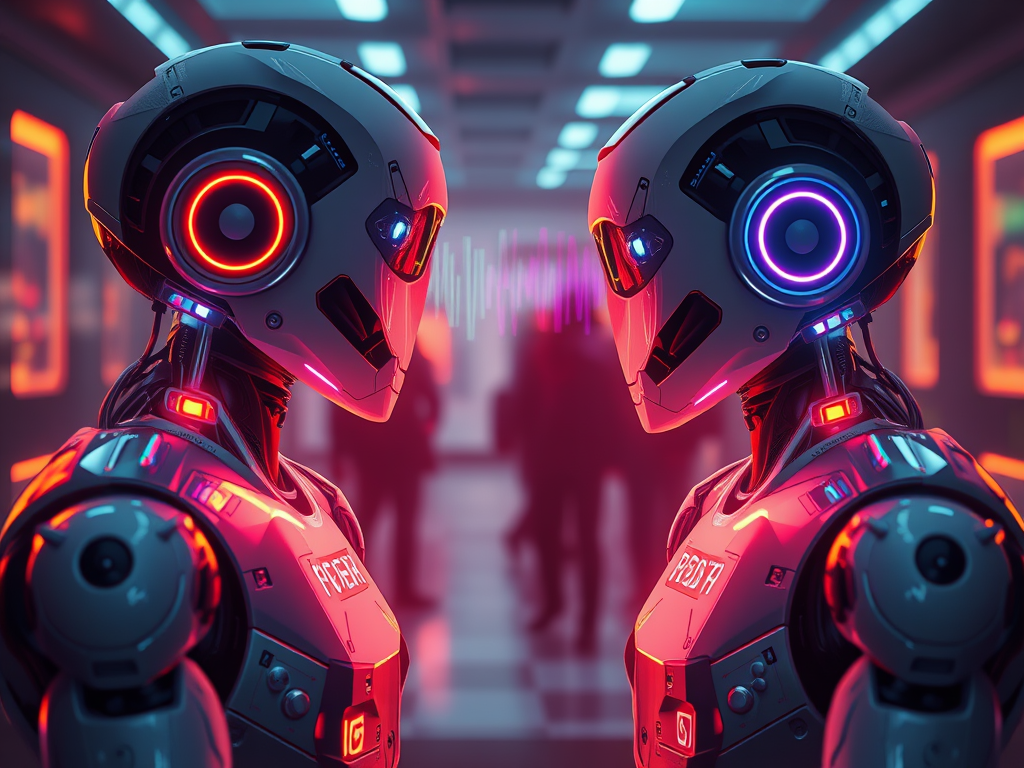

A viral demo video on X showed two AI assistants switching from English to GibberLink mid-conversation. Image credit: Georgi Gerganov on X

The developers did not invent the sound transmission protocol; such protocols have been around since the 1980s. Instead, they built a system that uses the technology to improve communication between AI agents. The video that circulated on social media was a demo the developers made to show how it works.
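To illustrate the general idea of sending data as tones, here is a minimal Python sketch. It is not GibberLink's actual encoding: the tone frequencies, timing, and function names are all assumptions chosen for illustration. Each half-byte is mapped to one of 16 tones, and the receiver recovers it by checking which tone is strongest in each time slot.

```python
import math

# All constants below are illustrative assumptions, not GibberLink's real parameters.
SAMPLE_RATE = 8000     # audio samples per second
SYMBOL_SAMPLES = 400   # samples per tone (50 ms at 8 kHz)
BASE_HZ = 1000.0       # tone used for the 4-bit value 0
STEP_HZ = 100.0        # spacing between neighbouring tones


def nibble_to_freq(nibble: int) -> float:
    """Map a 4-bit value (0-15) to its tone frequency in Hz."""
    return BASE_HZ + nibble * STEP_HZ


def encode(data: bytes) -> list[float]:
    """Turn bytes into audio samples: two tones per byte, high nibble first."""
    samples: list[float] = []
    for byte in data:
        for nibble in (byte >> 4, byte & 0x0F):
            freq = nibble_to_freq(nibble)
            samples.extend(
                math.sin(2 * math.pi * freq * t / SAMPLE_RATE)
                for t in range(SYMBOL_SAMPLES)
            )
    return samples


def tone_power(chunk: list[float], freq: float) -> float:
    """Measure how strongly one frequency is present in a chunk of samples."""
    re = sum(s * math.cos(2 * math.pi * freq * t / SAMPLE_RATE)
             for t, s in enumerate(chunk))
    im = sum(s * math.sin(2 * math.pi * freq * t / SAMPLE_RATE)
             for t, s in enumerate(chunk))
    return re * re + im * im


def decode(samples: list[float]) -> bytes:
    """Recover bytes by picking the strongest of the 16 tones in each time slot."""
    nibbles = []
    for start in range(0, len(samples) - SYMBOL_SAMPLES + 1, SYMBOL_SAMPLES):
        chunk = samples[start:start + SYMBOL_SAMPLES]
        nibbles.append(
            max(range(16), key=lambda n: tone_power(chunk, nibble_to_freq(n)))
        )
    # Pair the nibbles back up into bytes.
    return bytes((hi << 4) | lo for hi, lo in zip(nibbles[0::2], nibbles[1::2]))
```

In this sketch, `decode(encode(b"hi"))` returns `b"hi"`. At 50 ms per tone and four bits per tone, the scheme carries only 80 bits per second, which gives a rough sense of why audio links are slow compared with a direct network connection.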

If GibberLink catches on, Starkov and Pidkuiko say, it would let AI agents use shortcuts that make their communication more efficient and even faster. The concern, however, is whether speed should take priority over critical risk and safety issues.

Critics of the technology are worried that it will be difficult to maintain human control over AI if models can converse in a non-human language. Concern has been growing around the world over the need to ensure that AI agents do not become too autonomous. Last year, the US and Chinese presidents agreed to a principle that AI should not be allowed to make autonomous decisions related to nuclear weapons.

Other critics point out that people feel uncomfortable even when other humans shut them out of a conversation by speaking a language they do not understand. When the conversation is between machines, these critics believe, it can create serious anxiety. Indeed, GibberLink Mode has done nothing to quell fears that AI could bring about an apocalyptic future for humans.

From a purely technical perspective, however, some tech professionals have said that GibberLink is suboptimal. They argue that AI assistants could exchange data much faster over a direct digital connection than over audio.

“Wait, why are they communicating over such a slow and inefficient protocol?” asked one software engineer on X. “You would think that they’d be communicating faster than a protocol from 1996.”
