The big tech company has abandoned its commitments to AI safety, raising serious concerns…

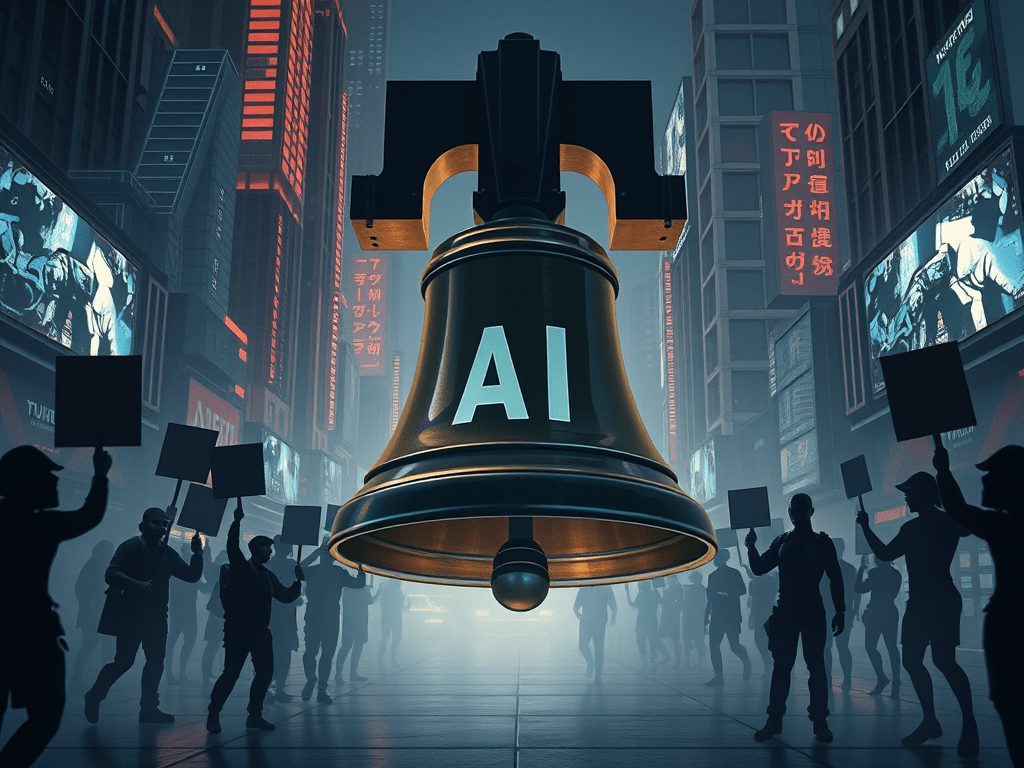

Experts and activists are sounding alarm bells as Google and the rest of big tech appear to be choosing profits over AI safety.

Last week, Google updated its ethical guidelines and removed commitments not to use AI for weapons or surveillance technology. The company had introduced those commitments in 2018 as part of an overall pledge to avoid applying AI technology in ways “likely to cause overall harm.”

Earlier this month, members of the Bulletin of the Atomic Scientists warned that the Doomsday Clock now stands at 89 seconds to midnight, citing pressing global issues, including the use of AI in destructive weapons.

Meanwhile, Geoffrey Hinton, Nobel Prize winner and one of the pioneers of artificial intelligence, has criticized Google for the move. He called it “another sad example” of companies abandoning AI safety for profits.

Hinton, often called the Godfather of AI, left Google in 2023 because he wanted to be free to criticize both the company and the rest of big tech for any decisions that threatened human safety.

Google believes that applications of AI in weapons and surveillance are necessary for the national security of free countries. However, the change comes after the US and China started talks to counter what they believe to be the serious threat that military applications of AI pose to humanity. In November 2024, President Biden and President Xi Jinping of China jointly endorsed the principle that AI should not be given control over nuclear launch decisions.

The concern is that once AI development starts down this military route, it may be difficult to control, even for superpowers.

Those fears have pushed protesters out onto the streets, calling for governments to double down on AI safety. They include PauseAI campaigners, who are active in Western countries.

“It’s not a secret any more that AI could be the most dangerous technology ever created,” says PauseAI founder Joep Meindertsma. The movement hopes to make enough noise to ensure that AI safety is taken seriously.

However, while such groups hoped that the global AI summit in Paris would yield positive news on the AI safety front, US Vice President JD Vance instead accused Europe of excessive regulation of AI. He added that the EU would have to abandon red tape if it were to work with the US on the further development of AI.

AI has also shown various positive applications, including helping to discover new antibiotics and antimicrobial peptides that could help humanity fight stubborn germs.
