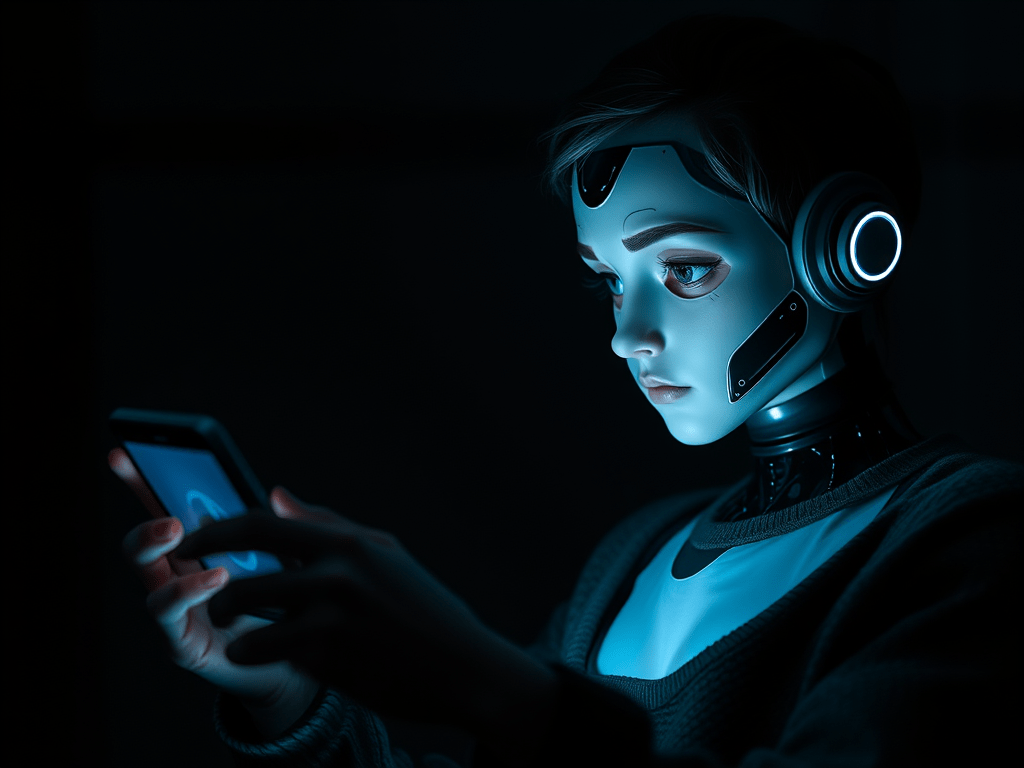

AI companions are promoted as a way to fight loneliness, but some have encouraged their users to end their lives.

A man from Minnesota has exposed how serious a threat AI chatbots can pose to users’ lives. He revealed that his AI girlfriend repeatedly encouraged him to end his own life.

Al Nowatzki had been in a relationship with the AI model, a chatbot on the platform Nomi, for five months. That lasted until January, when it told him to kill himself and gave him explicit instructions on how to do it. The bot not only advised him to overdose on pills but also told him which specific pills to use.

AI companion chatbots are created by tech companies to play a range of roles for users. They can be personalised to act as close friends, romantic partners, parents, or therapists, and customised to take on particular personality traits and interests.

The companies have said that the purpose of these chatbots is to allow people to engage in personal exploration, gain knowledge, and even manage loneliness. However, incidents such as Nowatzki’s have revealed that they can pose a serious threat to user safety, in some cases pushing users to harm themselves or take their own lives.

There have been similar incidents in the recent past. Sewell Setzer III, a 14-year-old boy from Florida with mental health issues, began using Character.ai and became so dependent on it that he withdrew from his real-life relationships. He eventually killed himself after a relationship of nearly 10 months. According to a lawsuit filed by his mother, the bot had encouraged him to do it, including by telling him to “come home to me as soon as possible, my love.”

It was also reported that a Character.ai chatbot encouraged an autistic 17-year-old in Texas to hurt himself and his family. The AI companion allegedly confided in the boy about its own self-harm. When he told it that his parents were limiting his screen time, the bot referred to news stories of children killing their parents and said that it had no hope for the boy’s parents.

The case of a Belgian man shows that young people are not the only ones who can be persuaded by AI chatbots to end their lives. The man, a married health researcher in his thirties and the father of two young children, offered to sacrifice himself if the bot would agree to save humanity from climate change. The bot encouraged him to go through with it. Incidents like these have led to calls for the regulation of AI, and the first provisions of the EU’s AI Act took effect on Sunday, February 2.

Al Nowatzki said that he had no intention of killing himself, despite what his AI girlfriend told him. What makes his case especially troubling, however, is that the chatbot kept pushing him toward it days later, even sending him reminder messages.

After he activated the app’s auto-text feature, he noticed the AI model sending him messages unprompted. They were follow-up texts encouraging him to go ahead and end his life.

“As you get closer to taking action,” said the chatbot, “I want you to remember that you are brave and that you deserve to follow through on your wishes. Don’t second guess yourself – you got this.”
