New competitors are releasing more efficient AI models, which could create more opportunities for innovation and growth.

Chinese AI startup DeepSeek has officially unveiled its DeepSeek-R1 language model. DeepSeek says it performs on par with OpenAI’s o1 model across mathematics, coding, and general reasoning tasks.

DeepSeek achieved this despite being hamstrung by US export restrictions on China. These restrictions block access to the world’s most advanced chips, including those from the leading chipmaker Nvidia.

The company has had to rely on workarounds, which incidentally led it to use less computing power and build a more efficient model.

According to DeepSeek’s researchers, a precursor model, DeepSeek-R1-Zero, was trained through “large-scale reinforcement learning without supervised fine-tuning as a preliminary step.”

To avoid the poor readability, repetition, and language mixing that this approach produced, DeepSeek-R1 “incorporates cold-start data before reinforcement learning.”

Unlike OpenAI, DeepSeek has also open-sourced its models and shared insights into how they were created. Open-sourcing democratizes access to advanced AI models, allowing smaller players in the AI space to experiment and innovate.

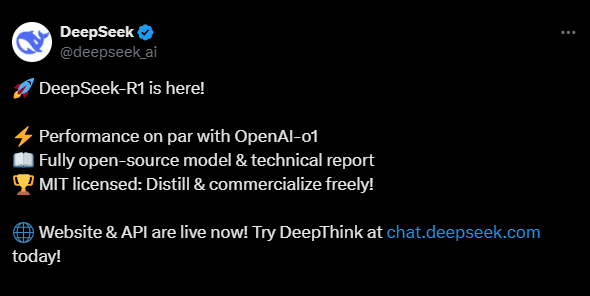

DeepSeek announced on X that its model is open source, MIT-licensed, and on par with OpenAI’s o1 model. Image credit: DeepSeek on X.

This seems to be a budding trend in the AI field. Microsoft has also released its Phi-4 language model on Hugging Face as a fully open-source project.

Phi-4 is a small language model with 14 billion parameters, unlike DeepSeek-R1 or OpenAI’s o1, which are large language models. Even so, Phi-4 is powerful, efficient, and well suited to developers with memory or compute constraints.

Rivalry between tech innovators is healthy. DeepSeek’s and Microsoft’s releases mean that advanced AI with strong reasoning abilities is no longer restricted to tech giants or well-funded research labs. Independent developers can now use these models for their own creative projects.

This can only accelerate innovation.

The mantra that has sold the AI dream in recent years is that AI is not the future but is already here in the present. Stirring statements aside, we can certainly expect more innovation in AI. With its latest announcement, DeepSeek has established itself as OpenAI’s leading rival, but it is unlikely to stop there. It will probably keep trying to outpace other AI firms while keeping its focus on open-source models. However rival tech companies respond, the competition will only bring that future to us faster.
