Apple has suspended its new AI feature due to repeated mistakes in headline summaries. But Apple is not the only tech company whose AI gets facts wrong, and stakeholders are deeply worried.

Apple has suspended a new AI feature on its iPhones. The feature has drawn a lot of complaints due to its repeated mistakes in headline summaries.

The feature was available on Apple’s recent devices running iOS 18.1 or later. It delivered notifications containing AI-generated summaries of news headlines, but those summaries were often misleading and sometimes flat-out wrong.

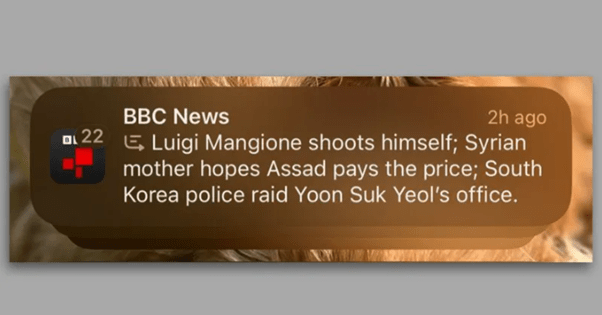

Its summary of a BBC News report on Luigi Mangione, who is accused of murdering health insurance CEO Brian Thompson in New York, made it appear that the outlet had reported that Mangione had shot himself.

The AI feature falsely shows BBC News reporting that Luigi Mangione committed suicide. He did not. Image credit: BBC News

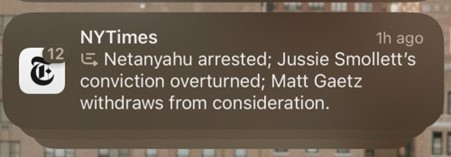

It also misrepresented a New York Times report on Israeli Prime Minister Benjamin Netanyahu. The summary said that Netanyahu had been arrested, whereas the Times had only reported that the International Criminal Court had issued a warrant for his arrest.

The New York Times reported that the ICC had issued a warrant for Netanyahu’s arrest, but Apple’s AI feature falsely presented it as an actual arrest. Image credit: Ken Schwencke on Bluesky

There have been similar errors involving other major media outlets, such as the Washington Post and Sky News.

The concern for these news organizations is that tools like this can put a serious dent in their credibility. With complaints mounting, Apple was finally pressured into withdrawing the feature. According to analysts, because Apple rarely responds to criticism, its open acknowledgment of the problem and its decision to withdraw the feature “speak volumes” about how bad the errors were.

More importantly, the episode highlights the inherent problem with using artificial intelligence to deliver sensitive and delicate information.

Reporters Without Borders (RSF) declared that the Apple incident has shown generative AI to be “too immature to produce reliable information for the public.”

But public news is not the only thing stakeholders are worried about. After all, the errors in Apple’s feature were noticed quickly precisely because they were public.

What about information that people are searching for privately?

The surge in generative AI usage has led big tech companies to replace traditional blue-link search results with AI-generated answers. But the fundamental problem, says MIT Technology Review’s Mat Honan, is that AI models can mix up facts and confidently give people false information, and yet big tech has not slowed its adoption of them.

In response to one query, Google’s AI Overviews stated that MIT Technology Review launched its online presence in late 2022. It apparently confused the publication’s website with its newsletter, which is what actually launched in 2022. The tool did not know the answer, yet it responded with full confidence, and unwary users can easily take such an answer at face value.

Many people are embracing these models for search and relying on their output for the information they use every day. Until tech companies deliver the reliability improvements they have been promising, experts like Mat Honan worry that people’s understanding of reality and facts could be seriously skewed.

Apple’s suspension of its new AI feature shows that the company recognizes the problem. The crucial question now is what Apple and other big tech companies will do to correct it.

Featured image credit: Andrea Piacquadio on Pexels
